Just over three years ago, ChatGPT launched. By now, according to the breathless vendor keynotes, we should all be living in fully automated luxury communism, with AI agents handling our analytics while we sip cocktails on the beach. You’re still here, though, which means either the revolution got delayed or the promises were oversold.

Here’s what actually happened: The AI labs have been improving model capabilities faster than most data leaders can keep up with. Meanwhile, the BI tool vendors are doing the opposite. They’re raising prices on increasingly disappointing capabilities, extracting value from captive customers while the technology landscape shifts underneath them.

If you’re heading into the holiday break and you’ve got a Tableau or Power BI renewal coming up in 2026, this is for you: a deep dive on several interconnected trends reshaping the analytics stack, what you can do differently next year, and a few examples of genuinely useful tools worth looking at during your upcoming downtime.

The short version: legacy BI tools are under pressure from multiple directions at once. The response from Salesforce and Microsoft hasn’t been innovation. It’s been price extraction and disappointing AI bolt-ons that don’t solve the underlying problems.

Let me show you what’s actually happening.

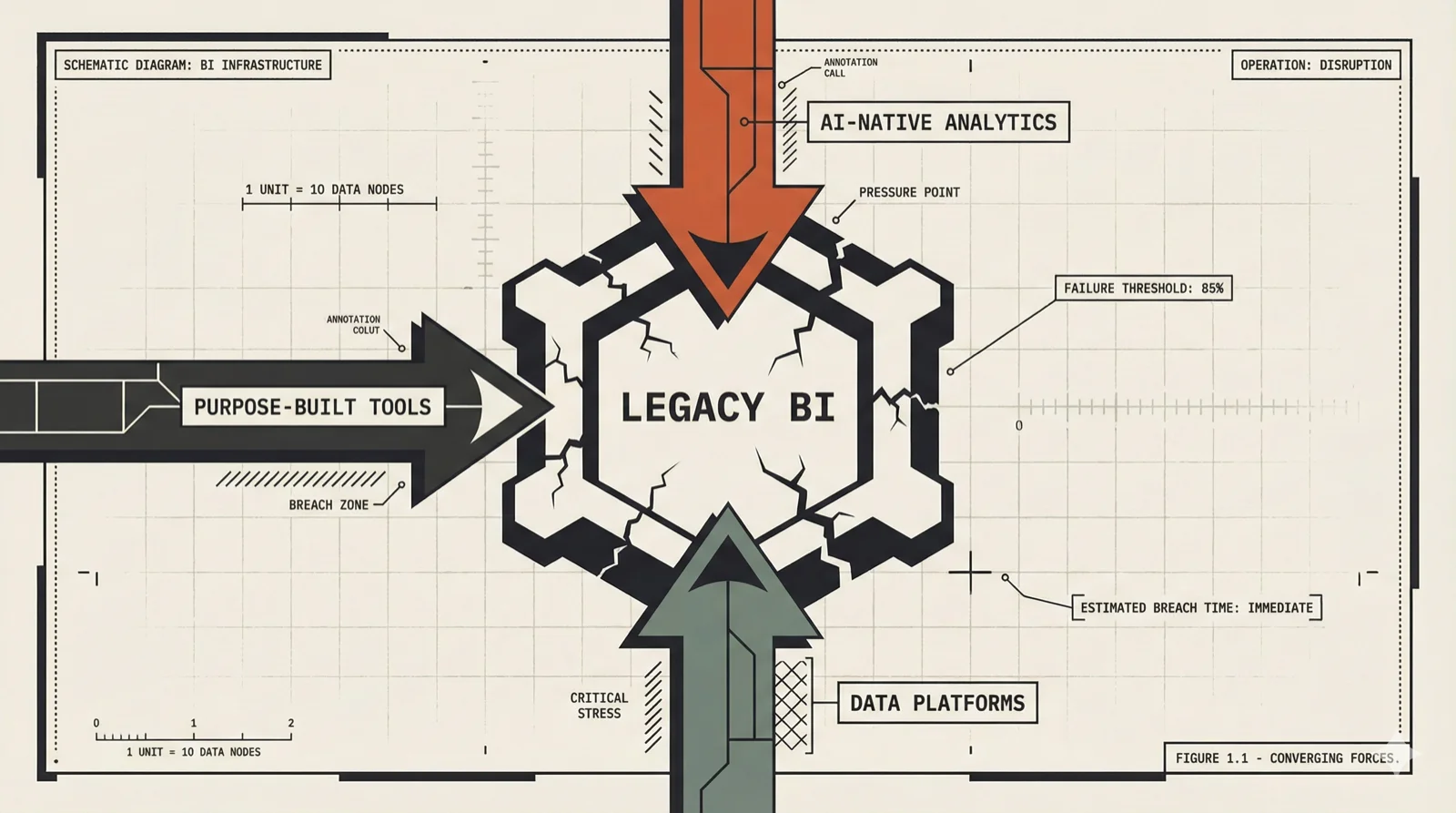

Legacy BI Under Siege

Traditional BI vendors face simultaneous threats from multiple directions. None of them look survivable long-term.

From above, AI-native analytics tools are consuming dashboards the way they’re consuming everything else: as context for reasoning, not destinations for users. Tools like TextQL let you ask “Show me Q4 revenue by region with year-over-year comparison” and get an answer, along with natural follow-ups like “Which regions are trending down?” and “What’s driving the decline?” The traditional workflow (logging in, finding the right dashboard, clicking through tabs and filters, reconstructing the answer from fragments) starts to look like unnecessary friction. The BI tool becomes infrastructure. Important but invisible, like a database.

From below, data platforms are moving up the stack. Databricks AI/BI with Genie. Snowflake Cortex. These platforms already store your data and define your metrics. They’re realizing they can deliver insights directly to users without a separate BI layer in between. Why export to a visualization tool when the platform can answer questions and generate visualizations natively?

From the side, best-of-breed analytics tools like Sigma are solving the problems that Power BI and Tableau stopped prioritizing years ago. Purpose-built data applications that handle complete workflows, not just visualizations. Flexible exploration that doesn’t require filing tickets with the data team. Tools that people actually choose to use when they have a choice.

Your BI tool is becoming a middleman in a market that’s eliminating middlemen.

This puts legacy vendors in an impossible position. They’re being commoditized from above, disintermediated from below, and out-innovated from the side. The strategic response? Raise prices, bundle aggressively, bolt on underwhelming AI features, and hope customers don’t notice the walls closing in.

Why the Pressure Is Structural

To understand why this is happening now, you need to see the analytics shift underneath the market dynamics.

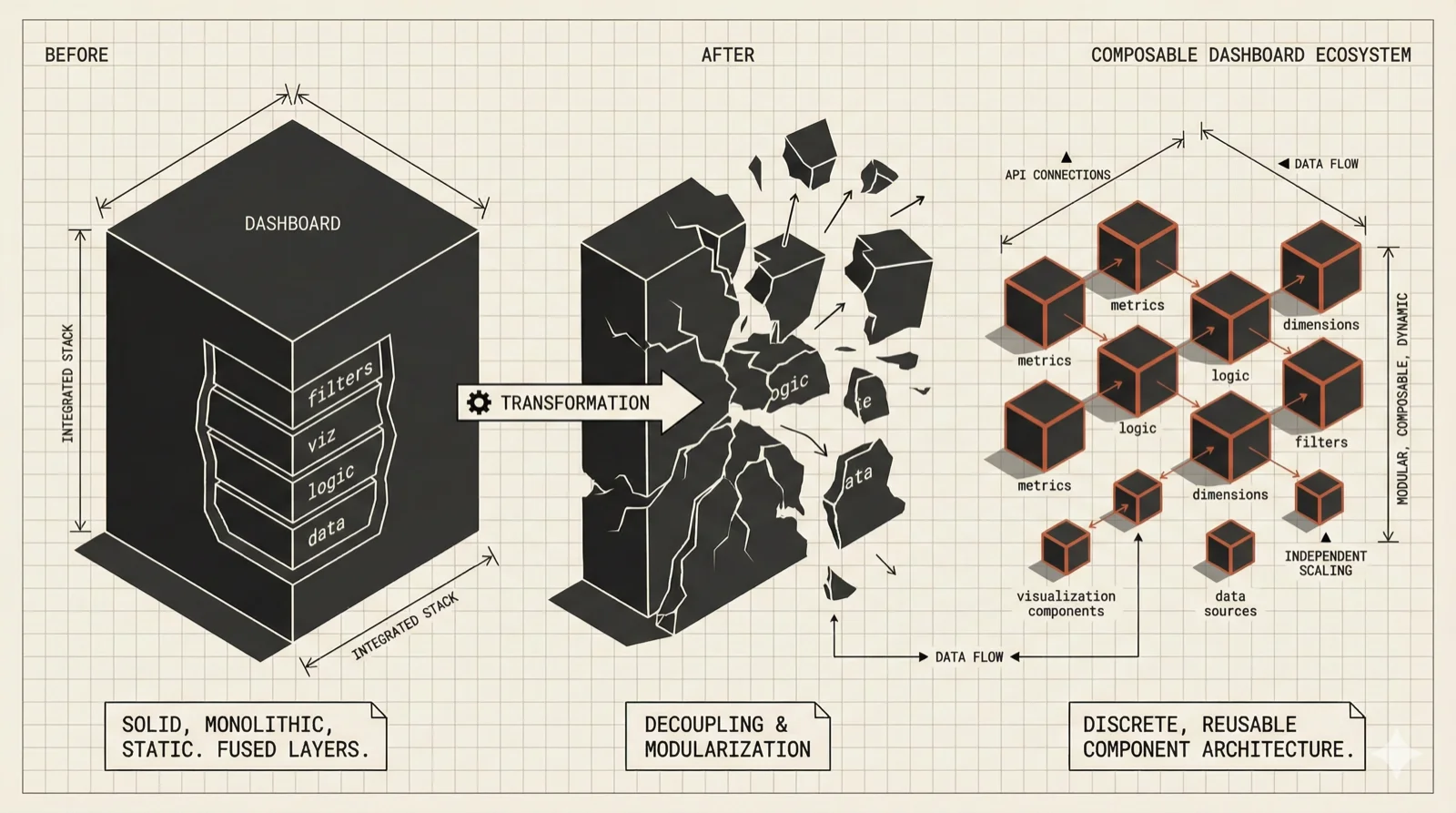

The dashboard isn’t dead, but it’s fundamentally changing. What used to be a monolithic artifact (a carefully designed collection of data, business logic, goals, filter paths, and visualizations) is decomposing into component pieces: metrics.

These metrics are becoming machine-readable via semantic layers, not just human-readable via dashboards. When a metric is properly defined with clear business logic, calculation rules, and dimensional context, it becomes something an AI agent can consume and reason about. It stops being just a number on a screen and becomes queryable, composable, reusable.

This is why semantic layers emerged so quickly. Companies faced metric chaos: the same KPI calculated differently across dozens of dashboards, nobody sure which version was correct. The semantic layer provides a centralized place to define what “revenue” actually means, how “customer churn” should be calculated, which dimensions matter.
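To make “machine-readable metric” concrete, here is a minimal sketch, assuming a hypothetical `MetricDefinition` structure and a hypothetical `orders` table. Real semantic layers (dbt’s, Cube’s, the platforms’ own) each use their own schema and syntax; this is not any of them. The point is that the calculation rule, the filters, and the allowed dimensions live in one place, so every consumer compiles the same SQL instead of re-deriving “revenue” in each dashboard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricDefinition:
    """One governed metric: a hypothetical, simplified semantic-layer entry."""
    name: str
    description: str
    table: str
    expression: str                  # SQL aggregate, e.g. "SUM(amount)"
    filters: tuple[str, ...] = ()    # business rules applied to every query
    dimensions: tuple[str, ...] = () # the only columns you may group by

    def to_sql(self, group_by: tuple[str, ...] = ()) -> str:
        """Compile the metric into SQL, rejecting dimensions it doesn't declare."""
        unknown = [d for d in group_by if d not in self.dimensions]
        if unknown:
            raise ValueError(f"{self.name} does not support dimensions: {unknown}")
        select = [*group_by, f"{self.expression} AS {self.name}"]
        sql = f"SELECT {', '.join(select)} FROM {self.table}"
        if self.filters:
            sql += " WHERE " + " AND ".join(self.filters)
        if group_by:
            sql += " GROUP BY " + ", ".join(group_by)
        return sql


# Hypothetical definition: "revenue" means completed, non-refunded order amounts.
REVENUE = MetricDefinition(
    name="revenue",
    description="Sum of completed order amounts, excluding refunds.",
    table="orders",
    expression="SUM(amount)",
    filters=("status = 'completed'", "is_refund = FALSE"),
    dimensions=("region", "order_quarter"),
)

print(REVENUE.to_sql(group_by=("region",)))
```

Asking for a dimension the definition doesn’t declare raises an error rather than quietly producing a number nobody agreed on, which is the governance half of the idea.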

Then something interesting happened. What started as specialized tooling (dbt Metrics, Cube, MetriQL) got adopted as a feature by data platforms and BI tools themselves. Snowflake, Databricks, Tableau, Power BI all added semantic layer capabilities. The pattern commoditized.

Here’s what this means: once your metrics are properly defined, the visualization layer becomes increasingly optional. Different consumption patterns make sense for different users and use cases. Sometimes that’s a traditional dashboard. Sometimes it’s a conversational AI interface. Sometimes it’s the data platform itself. Sometimes it’s a purpose-built application.
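Here is a minimal sketch of that idea: one metric definition, two consumers. The definition format, the `customer_periods` table, and both helper functions are hypothetical. The agent-facing output imitates the JSON-schema style that many LLM tool-calling interfaces accept, but it is not any specific vendor’s format.

```python
import json

# Hypothetical metric definition; field names are illustrative only.
# Assumes customer_periods has one row per customer active at period start.
CHURN_RATE = {
    "name": "churn_rate",
    "description": "Share of customers active at period start who cancelled during the period.",
    "table": "customer_periods",
    "expression": "AVG(CASE WHEN cancelled_in_period THEN 1.0 ELSE 0.0 END)",
    "dimensions": ["region", "plan"],
}


def as_dashboard_sql(metric: dict, group_by: list[str]) -> str:
    """Consumer 1: a dashboard or data app that needs plain SQL."""
    cols = ", ".join(group_by + [f"{metric['expression']} AS {metric['name']}"])
    sql = f"SELECT {cols} FROM {metric['table']}"
    return sql + (f" GROUP BY {', '.join(group_by)}" if group_by else "")


def as_agent_tool(metric: dict) -> dict:
    """Consumer 2: a JSON-schema-style tool description an AI agent could call."""
    return {
        "name": f"query_{metric['name']}",
        "description": metric["description"],
        "parameters": {
            "type": "object",
            "properties": {
                "group_by": {
                    "type": "array",
                    # The agent can only pick dimensions the metric declares.
                    "items": {"type": "string", "enum": metric["dimensions"]},
                }
            },
        },
    }


print(as_dashboard_sql(CHURN_RATE, ["plan"]))
print(json.dumps(as_agent_tool(CHURN_RATE), indent=2))
```

Neither consumer owns the definition; swapping the dashboard for a chat interface changes only the rendering function.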

The BI vendors know this. They’re all rushing to add semantic layer features. But it’s also why their core value proposition is eroding. If the real asset is the metric layer, and if that layer can be consumed by AI interfaces, data platforms, and purpose-built applications equally well, what exactly are you paying the BI vendor for?

The New Economics of Building

Meanwhile, another shift is reshaping what analytics teams can actually build. The capabilities of agentic coding tools mean the time and cost of creating code have dropped dramatically. There are still complexities around review, deployment, and integration with other systems, plus design, testing, iteration, and maintenance. But the pure mechanics of writing code? Increasingly automated.

This changes the economics of your analytics infrastructure fundamentally. Data teams are building more integrations, deploying more self-managed tools, creating more custom solutions rather than buying pre-built connectors. Data engineering teams are generating dbt models faster than manual coding ever allowed.

Here’s the reversal that matters: Agentic coding has become the new no-code.

Teams that previously bought tools like Alteryx, Tableau Prep, Power Automate, Zapier, Tray.io, or Retool for speed via a wider pool of citizen developers are discovering they can move faster with code-first approaches. The abstraction layer that justified those tools (“our analysts can’t write Python”) is dissolving. Your analysts might not be able to write Python, but Claude can, and the collaboration between human intent and AI execution is often more flexible than the pre-built workflows in no-code platforms.
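As an illustration of what replaces a drag-and-drop workflow, here is a typical prep step (join two tables, aggregate by a dimension) written as a few lines of reviewable, diffable code. This is a sketch using only the Python standard library; the sample data and column names are made up. A team might well use pandas or SQL instead. The point is that the logic lives in a plain text file anyone can read, review, and version-control.

```python
import csv
import io
from collections import defaultdict

# Hypothetical inputs standing in for two exported files.
ORDERS_CSV = """order_id,customer_id,amount
1,c1,120.00
2,c2,80.50
3,c1,40.00
4,c3,200.00
"""

CUSTOMERS_CSV = """customer_id,region
c1,EMEA
c2,AMER
c3,AMER
"""


def revenue_by_region(orders_csv: str, customers_csv: str) -> dict[str, float]:
    """Join orders to customers on customer_id, then sum amount per region."""
    region_of = {
        row["customer_id"]: row["region"]
        for row in csv.DictReader(io.StringIO(customers_csv))
    }
    totals: dict[str, float] = defaultdict(float)
    for row in csv.DictReader(io.StringIO(orders_csv)):
        # Orders with no matching customer are bucketed, not silently dropped.
        region = region_of.get(row["customer_id"], "UNKNOWN")
        totals[region] += float(row["amount"])
    return dict(sorted(totals.items()))


print(revenue_by_region(ORDERS_CSV, CUSTOMERS_CSV))
# prints: {'AMER': 280.5, 'EMEA': 160.0}
```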

All of a sudden, the hidden costs of no-code tools seem more constricting than enabling. They’re hard to collaborate on. They require proprietary drag-and-drop training. They’re often black box files. We used to work around all of those limitations because the enabling technology was worth it. We don’t have to anymore.

What does this mean for the broader no-code market? Vendors are racing to bolt on AI-powered object generation. Type what you want, get generated outputs. But there’s a pace question: are they building faster than they’re bleeding customers to Claude plus Streamlit, or similar code-first frameworks?

The lock-in that justified enterprise no-code pricing (“we can’t leave because we’d have to rewrite everything”) looks different when rewriting takes a fraction of the time it used to.

Why This Is Happening Now

The pressures I’ve described have been building for a while. What’s changed is the capability of the models underneath.

The reasoning capabilities of frontier AI models have improved remarkably over the past year. Extended chain-of-thought means these models can stay on task longer, work through multi-step problems, and produce genuinely useful results rather than just impressive demos. Analysis isn’t usually a single question. It’s a process: querying multiple data sources, reconciling different metric definitions, checking results for errors, iterating based on what you find. Earlier AI analytics tools struggled with this extended exploration. The latest models handle it well.
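One step in that process, checking whether two sources agree on the same metric before trusting either, is easy to make explicit, whether an agent or an analyst runs it. A minimal sketch, assuming each source has already been reduced to totals keyed by a shared dimension; the source names, the sample numbers, and the 1% tolerance are hypothetical choices.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Discrepancy:
    key: str
    source_a: Optional[float]
    source_b: Optional[float]


def reconcile(
    a: dict[str, float], b: dict[str, float], rel_tol: float = 0.01
) -> list[Discrepancy]:
    """Flag keys missing from either source or differing by more than rel_tol."""
    issues = []
    for key in sorted(a.keys() | b.keys()):
        va, vb = a.get(key), b.get(key)
        if va is None or vb is None or abs(va - vb) > rel_tol * max(abs(va), abs(vb)):
            issues.append(Discrepancy(key, va, vb))
    return issues


# Hypothetical totals from a warehouse and a CRM for the same quarter.
warehouse = {"AMER": 280.5, "EMEA": 160.0, "APAC": 95.0}
crm = {"AMER": 280.0, "EMEA": 172.0}
for issue in reconcile(warehouse, crm):
    print(issue)
```

Here AMER passes (within 1%), EMEA is flagged for a real gap, and APAC is flagged because the CRM has no value at all: the kind of finding that should prompt a follow-up question rather than a chart.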

If you evaluated agentic analytics tools a year ago and found them wanting, they’ve crossed some useful thresholds since then. The AI-first startups that were demoware twelve months ago are now providing genuine production value. The legacy BI vendors are being caught flat-footed.

The Enshittification Response

So legacy BI is under structural pressure, and the AI-native alternatives have become genuinely capable. How are the incumbents responding?

Salesforce and Microsoft have massive AI infrastructure investments to fund. Those investments aren’t paying off yet, but the spending is very real. That money has to come from somewhere.

It’s coming from you.

Watch the pattern: Enterprise pricing increases. Aggressive bundling that makes it harder to reduce seats. New AI features that require premium SKUs. The push to Tableau Cloud, with important features like Pulse gated behind migration. More sunk cost keeping you attached to the platform. The playbook is familiar because it works, at least until customers realize they have options.

The numbers are stark. Power BI Pro went up 40% in April 2025 (from $10 to $14 per user per month). Salesforce has raised prices 9% on average across core products including Tableau, with on-premises Tableau subscriptions jumping up to 33% to push customers toward cloud. These aren’t inflation adjustments. They’re value extraction from captive customers.
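The Power BI figure is worth running against your own seat count before renewal. A quick sketch of the arithmetic; the 500-seat organization is a hypothetical example, and list prices ignore negotiated discounts and bundling.

```python
def price_increase(old_price: float, new_price: float, seats: int) -> tuple[float, float]:
    """Return (percent increase, added annual cost) for per-user-per-month pricing."""
    pct = (new_price - old_price) * 100 / old_price
    added_annual = (new_price - old_price) * 12 * seats
    return pct, added_annual


# Power BI Pro list price: $10 -> $14 per user per month; 500 seats is hypothetical.
pct, added = price_increase(10.0, 14.0, seats=500)
print(f"{pct:.0f}% increase, ${added:,.0f} more per year")
# prints: 40% increase, $24,000 more per year
```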

The vendor pitch is that their AI capabilities justify the premium. Power BI’s Copilot integration. Salesforce’s Agentforce. The promise is always the same: natural language queries, automated insights, AI-powered dashboards.

The reality? You’re paying enterprise prices for chatbot interfaces to the same dashboard architecture. The fundamental value proposition hasn’t changed. You’re just accessing it through a different input mechanism, one that isn’t particularly good compared to what’s available outside the enterprise BI ecosystem.

Tableau Next has been announced as the future of Salesforce’s BI strategy. But Tableau Next isn’t Tableau in the sense that made Tableau valuable. It’s not a flexible, general-purpose analytics tool meant to solve all sorts of business problems. If you have a customer-data or CRM-focused problem and you’re using Salesforce Data Cloud, it’s probably great. But most organizations have more problems to solve than that.

Meanwhile, the tools that are genuinely rethinking analytics workflows (Sigma’s data apps, code-first frameworks powered by agentic AI) are solving problems Tableau still can’t touch.

What to Actually Do

So those are the forces reshaping the BI and AI analytics landscape, and they’re why Salesforce and Microsoft are resorting to price extraction instead of innovation. If you’re thinking about what to do differently in 2026, here’s a roadmap:

Stop renewing enterprise BI contracts on autopilot. I mean this literally. The value proposition that justified those investments five years ago no longer holds. Every dollar spent on per-seat BI licensing is a dollar not spent on tools that might actually change how your organization works with data. It’s possible to replace your Tableau spend with a mix of much better options, pay less in total, and still get the AI capabilities you want.

Push agentic coding tools to your data and analytics teams. This is the highest-leverage investment you can make right now. A data engineer with Claude access can build integrations, transformations, and custom analytics applications faster than the same person constrained to your enterprise platform’s capabilities. The productivity gains are real and immediate.

Start a pilot program for citizen developers. The “analysts can’t code” assumption that justified low-code platforms is dissolving. Your business analysts collaborating with AI coding tools may be more capable than your business analysts constrained to Alteryx workflows. Test this assumption before your next renewal.

Invest in semantic layers, but don’t overinvest in specific implementations. Machine-readable, governed metrics matter for both human and AI consumption. But semantic layer tooling is commoditizing. Choose implementations that play well with multiple downstream consumers (data platforms, AI interfaces, purpose-built applications), not vendor-locked approaches.

Look hard at purpose-built analytics tools. Platforms like Sigma let you build data applications solving complete workflows, not just visualizations but the full loop from insight to action. The gap between “here’s a dashboard” and “here’s a tool that actually does something” is where real value lives.

Evaluate AI-native analytics seriously. If you dismissed these tools a year ago, the landscape has shifted. Tools like TextQL have autonomous reasoning capabilities that now work in production, not just demos. Your legacy vendor’s bolted-on AI features aren’t keeping pace.

Build well-integrated systems. The excuse for tolerating disconnected tools was that integration was expensive and fragile. With agentic coding capabilities, that excuse evaporates. You can build the glue. You can automate the workflows. You can create systems that actually work together rather than collections of vendor platforms that barely talk to each other.
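Much of “building the glue” comes down to small, reliable pieces. The sketch below shows one: an idempotent upsert that syncs records from one system into another, so a retried or re-run job doesn’t create duplicates or rewrite unchanged rows. The source and destination are plain Python structures standing in for hypothetical API clients; a real integration would add authentication, pagination, and error handling.

```python
from typing import Callable, Iterable


def sync_records(
    source_rows: Iterable[dict],
    destination: dict[str, dict],
    key: str = "id",
    transform: Callable[[dict], dict] = lambda row: row,
) -> dict[str, int]:
    """Upsert source rows into destination, keyed by `key`.

    Idempotent: re-running with the same input reports everything as unchanged.
    """
    counts = {"inserted": 0, "updated": 0, "unchanged": 0}
    for row in source_rows:
        record = dict(transform(row))  # copy so later edits to the source don't leak in
        record_id = str(record[key])
        existing = destination.get(record_id)
        if existing is None:
            counts["inserted"] += 1
        elif existing == record:
            counts["unchanged"] += 1
            continue  # skip the write entirely
        else:
            counts["updated"] += 1
        destination[record_id] = record
    return counts


# Hypothetical CRM accounts synced into a hypothetical warehouse table.
warehouse_accounts: dict[str, dict] = {}
crm_rows = [{"id": 1, "name": "Acme", "tier": "gold"}, {"id": 2, "name": "Globex", "tier": "silver"}]
print(sync_records(crm_rows, warehouse_accounts))  # first run inserts both
print(sync_records(crm_rows, warehouse_accounts))  # re-run changes nothing
```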

Tools That Serve Users

There’s an optimistic version of this story. For years, analytics infrastructure followed a certain logic: centralized platforms, governed workflows, standardized interfaces. The people using these tools daily had to adapt to the tools, not the other way around. The tools were designed to win procurement battles and satisfy IT requirements, not to help the marketing manager figure out why churn spiked last month.

The shifts I’ve described (metrics becoming machine-readable, AI interfaces becoming capable, code creation costs approaching zero) point toward something different. Tools that can be shaped to how people actually work. Analytics that meet users where they are rather than forcing them into predefined dashboards. Technology that gives people agency rather than constraining them, that amplifies judgment rather than replacing it.

For the first time in a while, that kind of analytics infrastructure is actually buildable. Not because any single vendor figured it out, but because the underlying capabilities have shifted enough that you can assemble something better than what the enterprise platforms are selling.

Three years into the AI era in analytics, the structural shifts are visible but not yet settled. That makes this an interesting time to be rethinking your analytics infrastructure. And a terrible time to be signing long-term contracts with vendors whose value proposition is eroding underneath them.